Introduction to Residual Network (ResNet)

- Bhumika Dutta

- Jan 09, 2022

Computer vision has seen a series of breakthroughs in recent years. Since the introduction of deep convolutional neural networks in particular, we have been getting state-of-the-art results on tasks such as image classification and image recognition.

As a result, to perform such complex tasks and enhance classification/recognition accuracy, researchers have tended to build deeper neural networks (adding more layers) over time.

However, as we add more layers to a neural network, it becomes harder to train, and its accuracy begins to saturate and then decline. ResNet was introduced to address this issue. We'll learn more about ResNet and its architecture in this blog.

What exactly is ResNet?

ResNet, short for Residual Network, is a type of neural network introduced by Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun in their 2015 paper "Deep Residual Learning for Image Recognition".

ResNet models were incredibly successful, as evidenced by the following:

1. Won first place in the ILSVRC 2015 classification competition with a top-5 error rate of 3.57 percent (an ensemble model).

2. Won first place in ImageNet Detection, ImageNet Localization, COCO Detection, and COCO Segmentation at the ILSVRC and COCO 2015 competitions.

3. Replacing the VGG-16 layers in Faster R-CNN with ResNet-101 gave a 28 percent relative improvement.

4. Networks with 100 layers and even 1000 layers were trained effectively.

The Mathematical Formula:

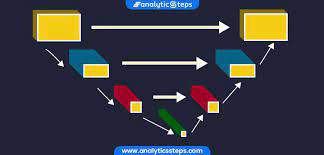

In a typical feedforward neural network, data travels sequentially through each layer, with the output of one layer serving as the input to the next. A residual connection provides a second path: by skipping some layers, it lets data reach later parts of the network directly.

Consider a sequence of layers, from layer i to layer i + n, and let F be the function that these layers represent. Denote the input to layer i by x. In a classic feedforward arrangement, x simply passes through these layers one by one, and the output of layer i + n is F(x).

The residual connection first applies the identity mapping to x and then performs the element-wise addition F(x) + x. In the literature, the whole structure that takes an input x and produces the output F(x) + x is called a residual block or a building block. A residual block frequently also includes an activation function, such as ReLU, applied to F(x) + x.
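The block described above can be sketched in a few lines of plain Python. This is only an illustrative sketch: the choice of F as two small matrix multiplications with a ReLU in between, and the names `relu`, `matvec`, `residual_block`, `W1`, and `W2`, are assumptions made for the example and do not come from the paper.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def residual_block(x, W1, W2):
    """Return relu(F(x) + x), with F(x) = W2 * relu(W1 * x) (assumed form)."""
    fx = matvec(W2, relu(matvec(W1, x)))     # F(x): the stacked layers
    assert len(fx) == len(x), "F(x) and x must have the same size"
    summed = [f + a for f, a in zip(fx, x)]  # element-wise F(x) + x
    return relu(summed)                      # activation applied to the sum

x = [1.0, -2.0, 3.0]
zero = [[0.0] * 3 for _ in range(3)]
# With all-zero weights F(x) = 0, so the block reduces to relu(x).
print(residual_block(x, zero, zero))  # [1.0, 0.0, 3.0]
```

With zero weights the stacked layers contribute nothing, and the input still reaches the output through the skip path.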

The key reason for stressing the seemingly unnecessary identity mapping in the diagram above is that it acts as a placeholder for more complex functions if they are required.

The element-wise addition F(x) + x, for example, only makes sense if F(x) and x have the same dimensions. If their dimensions differ, we can replace the identity mapping with a linear transformation (i.e. multiplication by a matrix W) and compute F(x) + Wx instead.
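As a rough illustration of the F(x) + Wx case, the sketch below uses a made-up F that maps three inputs to two outputs and a 2x3 matrix W that brings x to the same size. The helper names and the particular F and W are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def projected_residual(x, F, W):
    """Return F(x) + W x, for when F(x) and x differ in size."""
    fx = F(x)
    shortcut = matvec(W, x)          # linear map brings x to F(x)'s size
    assert len(shortcut) == len(fx), "W x must match the size of F(x)"
    return [f + s for f, s in zip(fx, shortcut)]

def F(x):
    """Hypothetical stack of layers mapping 3 inputs to 2 outputs."""
    return [x[0] + x[1], x[1] * x[2]]

# 2x3 projection that simply keeps the first two components of x.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]

print(projected_residual([1.0, 2.0, 3.0], F, W))  # [4.0, 8.0]
```

Here F(x) is [3.0, 6.0] and Wx is [1.0, 2.0], so the sum is well defined even though x itself has three components.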

In general, multiple residual blocks, with the same or different architectures, are used throughout the neural network.

Need for ResNet:

In deep neural networks, we usually stack additional layers to tackle a complex problem, with the aim of improving accuracy and performance. The idea behind adding more layers is that they will learn increasingly complex features as the network gets deeper.

In the case of image recognition, the first layer might learn to detect edges, the second layer might learn to identify textures, the third layer might learn to recognize objects, and so on. However, it has been found that the traditional convolutional neural network model has a maximum depth threshold.

Error% on training and testing data

To illustrate, here is a plot of the error% on training and testing data for a 20-layer network and a 56-layer network.

We can see that the 56-layer network has a higher error percentage than the 20-layer network on both training and testing data. This suggests that, past a certain depth, a network's performance degrades as additional layers are added on top of it. This could be attributed to the optimization function, network setup, and, most critically, the vanishing gradient problem.

You might suppose this is due to overfitting. However, the 56-layer network has the higher error on both training and testing data, which would not happen if the model were overfitting.

How does it help training deep neural networks:

Training a deep feedforward neural network is typically difficult due to issues such as exploding gradients and vanishing gradients.

However, the training process of a neural network with residual connections has been shown empirically to converge much more easily, even if the network has hundreds of layers.
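One way to get a feel for the difference is a toy scalar calculation. The sketch below assumes every layer is simply x -> w * x, so a plain stack has a local derivative of w per layer, while a residual block x -> x + w * x has a local derivative of 1 + w. The functions and the values of `w` and `n` are made up for illustration; this is an intuition for a scalar toy case, not an account of how real residual networks behave.

```python
def plain_gradient(w, n):
    """d(output)/d(input) for n stacked layers x -> w * x."""
    grad = 1.0
    for _ in range(n):
        grad *= w            # chain rule: multiply the local derivatives
    return grad

def residual_gradient(w, n):
    """Same, but each layer is a residual block x -> x + w * x."""
    grad = 1.0
    for _ in range(n):
        grad *= 1.0 + w      # the identity path adds 1 to each local derivative
    return grad

w, n = 0.01, 50
print(plain_gradient(w, n))     # vanishingly small
print(residual_gradient(w, n))  # stays on the order of 1
```

In this toy setting the plain product shrinks towards zero as depth grows, while the residual product stays close to 1 for small w. With larger w the residual product can also grow quickly, so the skip path alone does not guarantee well-behaved gradients.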

As with many other deep learning techniques, we do not yet fully understand all the subtleties of residual connections. We do, however, have some intriguing hypotheses that are backed up by substantial experimental evidence.

Dense residual connections:

Dense residual connections are one of the most important variations on residual connections. In a dense residual connection, the sum operation after the residual block is replaced by a concatenation. When F(x) has the same size as x, this doubles the size of the feature vector, and the size keeps growing with each repeated block, roughly n-fold after n blocks if every block adds the same number of features.

Dense residual connections lead to significantly larger neural networks that take a long time to train. They do, however, have the advantage of allowing each additional network layer to "see" a portion of the previous residual block outputs (somewhat like RNNs with attention). That gives the model more data to work with and, hopefully, a better fit.
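The difference between summing and concatenating can be sketched with plain Python lists. The example assumes, purely for illustration, that each layer F emits a fixed number of new features (two here) regardless of its input size. The names `dense_block` and `F` are hypothetical.

```python
def dense_block(x, F):
    """Return the concatenation [x, F(x)] instead of the sum F(x) + x."""
    return x + F(x)          # '+' on Python lists concatenates

def F(x):
    """Hypothetical layer that always emits two new features."""
    return [sum(x), max(x)]

x = [1.0, 2.0]
sizes = [len(x)]
for _ in range(3):           # stack three dense blocks
    x = dense_block(x, F)
    sizes.append(len(x))

print(sizes)    # [2, 4, 6, 8]
print(x[:2])    # [1.0, 2.0]
```

The first block doubles the feature vector, and each further block adds the same number of features again. The original input is still present, unchanged, at the front of the final vector, which is what lets later layers "see" earlier outputs directly.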

Conclusion

While residual connections do not solve the difficulties of exploding or vanishing gradients, they do solve another gradient-related issue.

The gradients of deep feedforward neural networks resemble white noise. This is known as the shattered gradients problem, and it is harmful to training. Residual connections aid the training process by introducing some spatial structure to the gradients.
