
Introduction To YOLOv4

- Neelam Tyagi

- Jun 25, 2020

“YOLO: you only look once, but sharper”

Over the last few years, object detection has matured rapidly, driven by CNNs and, ever since its launch, R-CNN, yet the rivalry in the field remains cut-throat.

Most CNN-based object detectors are largely applicable only to recommendation systems. For example, searching for free parking spaces through video cameras is performed by slow but accurate models.

Improving real-time object detection accuracy makes these detectors usable not only for recommendation systems but also for stand-alone process management with less human input. Running real-time object detection on a conventional GPU also allows their mass use at an affordable price.

This blog discusses YOLOv4, a new model that achieves state-of-the-art results on the Microsoft COCO dataset while running at a real-time speed of about 65 FPS.

What is YOLO?

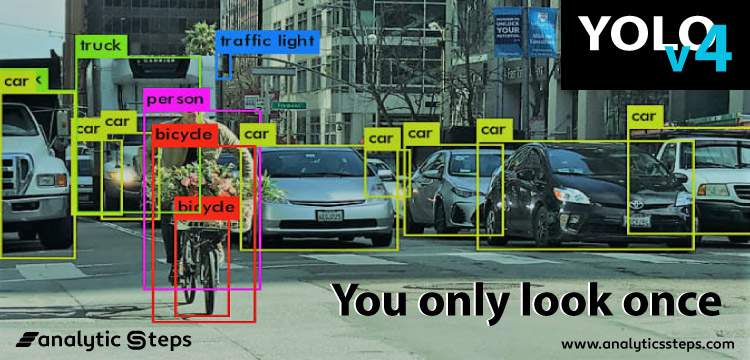

YOLO stands for You Only Look Once. It is a real-time object detection system that recognizes multiple objects in a single frame, and it identifies objects faster and more precisely than many other recognition systems.

It can predict up to 9,000 or more object classes, both seen and unseen. The system can recognize several objects in a single image, draw a bounding box around each of them, and be trained quickly and deployed in a production system.

It is also a milestone in object detection research that has led to better, faster, and more adaptable computer vision algorithms.

How does YOLO work?

Being entirely based on a convolutional neural network (CNN), YOLO divides an image into regions and predicts bounding boxes and probabilities for each region. In the same pass, it predicts multiple bounding boxes and the class probabilities for those boxes.
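To make the grid idea concrete, here is a minimal Python sketch in the style of the original YOLO, assuming an S × S grid with S = 7 and box coordinates normalized to [0, 1]. The function names are hypothetical. The cell that contains an object's center is responsible for predicting it, the center is stored as an offset inside that cell, and a class-specific score is the product of the conditional class probability and the box confidence.

```python
def responsible_cell(cx, cy, S=7):
    """Return (row, col) of the grid cell that contains the box center.

    cx and cy are box-center coordinates normalized to [0, 1].
    """
    col = min(int(cx * S), S - 1)  # clamp so that cx == 1.0 maps to the last column
    row = min(int(cy * S), S - 1)
    return row, col


def encode_center(cx, cy, S=7):
    """Express the box center as an (x, y) offset in [0, 1] inside its cell."""
    row, col = responsible_cell(cx, cy, S)
    return row, col, cx * S - col, cy * S - row


def class_specific_scores(class_probs, box_confidence):
    """Multiply each Pr(class | object) by the box confidence score."""
    return [p * box_confidence for p in class_probs]


# Hypothetical box centered at (0.5, 0.3) on a 7x7 grid.
row, col, dx, dy = encode_center(0.5, 0.3)
print(row, col, round(dx, 2), round(dy, 2))  # 2 3 0.5 0.1
print(class_specific_scores([0.7, 0.2, 0.1], box_confidence=0.9))
```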

YOLO sees the entire image during training and test time, so it implicitly encodes contextual information about classes as well as their appearance.

A closer look at YOLOv4

YOLOv4 significantly outperforms existing methods in terms of both detection performance and speed. In their paper, the research team describes it as a fast-operating object detector that can be trained easily and used in production systems.

The main objective was to optimize the neural network detector for parallel computation. After carefully analyzing how numerous detector features suggested in previous YOLO models affect performance, the team also introduces several architectures and architectural choices.

The work is by three authors, Alexey Bochkovskiy (the Russian developer who built the Windows version of YOLO), Chien-Yao Wang, and Hong-Yuan Mark Liao, and the entire code is available on GitHub.

YOLOv4 consists of:

- Backbone: CSPDarknet53
- Neck: Spatial Pyramid Pooling (SPP) additional module and PANet path aggregation
- Head: YOLOv3
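Because the head is the YOLOv3 head, its output size can be worked out by hand. The sketch below assumes a common configuration: a 608 × 608 input, the 80 COCO classes, three detection scales with strides 8, 16 and 32, and three anchors per scale, where each anchor predicts four box offsets, one objectness score and one score per class. The helper `head_output_shapes` is hypothetical.

```python
def head_output_shapes(input_size=608, num_classes=80, anchors_per_scale=3,
                       strides=(8, 16, 32)):
    """Return (grid_h, grid_w, channels) for each YOLOv3-style detection scale."""
    if input_size % max(strides) != 0:
        raise ValueError("input size must be a multiple of the largest stride")
    # Each anchor predicts 4 box offsets + 1 objectness score + one score per class.
    channels = anchors_per_scale * (5 + num_classes)
    return [(input_size // s, input_size // s, channels) for s in strides]


for shape in head_output_shapes():
    print(shape)  # (76, 76, 255), then (38, 38, 255), then (19, 19, 255)
```

Under these assumptions the head produces 3 × (76² + 38² + 19²) = 22,743 candidate boxes per image before post-processing filters them.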

CSPDarknet53 is a backbone designed to enhance the learning capability of a CNN. The spatial pyramid pooling (SPP) block is added on top of CSPDarknet53 to increase the receptive field and separate out the most important context features.
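As an illustration of the SPP block, the following pure-Python sketch max-pools a feature map with stride 1 and "same" padding at several kernel sizes and concatenates the results with the input along the channel axis, so the spatial size stays the same while the receptive field grows. The kernel sizes 5, 9 and 13 are an assumption based on commonly used YOLOv4 configurations, and a real implementation would work on tensors rather than nested lists.

```python
def max_pool_same(channel, k):
    """Stride-1 max pooling with padding k // 2, so the output keeps the input size.

    Out-of-bounds positions are skipped, which is equivalent to padding with -inf.
    """
    h, w = len(channel), len(channel[0])
    r = k // 2
    return [
        [
            max(
                channel[y][x]
                for y in range(max(0, i - r), min(h, i + r + 1))
                for x in range(max(0, j - r), min(w, j + r + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]


def spp_block(feature_map, kernel_sizes=(5, 9, 13)):
    """Concatenate the input with max-pooled copies of itself along the channel axis.

    feature_map is a list of channels; each channel is a 2-D list of floats.
    """
    out = list(feature_map)  # identity branch
    for k in kernel_sizes:
        out.extend(max_pool_same(channel, k) for channel in feature_map)
    return out


# Hypothetical 1-channel 8x8 map with a single strong activation in a corner.
fmap = [[[0.0] * 8 for _ in range(8)]]
fmap[0][0][0] = 1.0
pooled = spp_block(fmap)
print(len(pooled), "channels")  # 4 channels
for k, channel in zip((1, 5, 9, 13), pooled):
    # How many output positions now "see" the corner activation?
    print(k, sum(v > 0 for row in channel for v in row))
```

For the corner activation above, the identity, 5, 9 and 13 branches reach 1, 9, 25 and 49 positions respectively, which shows how each larger kernel widens the context available at every output position.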

PANet is used as the method of parameter aggregation from different backbone levels for different detector levels, instead of the Feature Pyramid Network (FPN) used in YOLOv3.
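The main difference between FPN and PANet is the extra bottom-up path. The toy sketch below models each feature map only by the set of backbone levels (hypothetically named C3 to C5, finest first) that contributed to it, and omits the actual upsampling, downsampling and convolutions. It shows that after FPN's top-down pass the coarsest output still only sees C5, whereas PANet's bottom-up pass lets fine, well-localized features reach every output level. In YOLOv4 the fusion is a concatenation rather than the addition used in the original PANet, a detail this set-based sketch does not distinguish.

```python
def fpn_then_pan(levels=("C3", "C4", "C5")):
    """Track which backbone levels feed each output map (finest level first).

    A feature map is modelled only by the set of backbone levels that
    contributed to it; resampling and convolutions are omitted.
    """
    # Top-down path (FPN): coarse, semantic features flow to finer levels.
    top_down = [None] * len(levels)
    top_down[-1] = {levels[-1]}
    for i in range(len(levels) - 2, -1, -1):
        # upsample the coarser map, then fuse it with the lateral backbone map
        top_down[i] = {levels[i]} | top_down[i + 1]

    # Bottom-up path (PAN): fine, well-localized features flow back up.
    bottom_up = [None] * len(levels)
    bottom_up[0] = set(top_down[0])
    for i in range(1, len(levels)):
        # downsample the finer map, then fuse it with the top-down map
        bottom_up[i] = top_down[i] | bottom_up[i - 1]
    return top_down, bottom_up


fpn_out, pan_out = fpn_then_pan()
print("FPN coarsest output sees:", sorted(fpn_out[-1]))  # ['C5']
print("PAN coarsest output sees:", sorted(pan_out[-1]))  # ['C3', 'C4', 'C5']
```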

Bag of freebies and Bag of specials

Several improvements can be made during training to increase accuracy, such as data augmentation, handling class imbalance, and changing the cost function. Because these changes do not affect inference speed, they are known as the “Bag of freebies”.
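Class label smoothing, one of the freebies YOLOv4 uses, illustrates why such changes cost nothing at inference time: only the training targets are modified. Below is a minimal sketch of the common formulation that mixes the one-hot target with a uniform distribution over the classes; the smoothing factor `epsilon = 0.1` is an assumed value, not one taken from the paper.

```python
def smooth_labels(one_hot, epsilon=0.1):
    """Blend a one-hot target with a uniform distribution over the K classes.

    Only the training target changes; the network and its inference cost do not.
    """
    k = len(one_hot)
    return [(1.0 - epsilon) * y + epsilon / k for y in one_hot]


# Hypothetical 4-class target whose true class is index 2.
soft = smooth_labels([0, 0, 1, 0], epsilon=0.1)
print([round(p, 3) for p in soft])  # [0.025, 0.025, 0.925, 0.025]
```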

Other improvements increase inference time only slightly but significantly improve accuracy; these are known as the “Bag of specials”.

These improvements include enlarging the receptive field, introducing attention mechanisms, feature integration such as skip connections and FPN, and post-processing such as non-maximum suppression (NMS).
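Non-maximum suppression, the post-processing step mentioned above, can be sketched in a few lines. This is the plain greedy variant (YOLOv4 itself uses the DIoU-NMS variant), and the IoU threshold of 0.45 and the example boxes are assumptions chosen for illustration. Boxes are given as (x1, y1, x2, y2).

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.45):
    """Greedy non-maximum suppression; returns the indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)  # highest-scoring remaining box
        keep.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

In practice NMS is usually run separately for each class. Here boxes 0 and 1 overlap with an IoU of about 0.82, so the lower-scoring box 1 is suppressed.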

Advancements in YOLOv4 compared with prior YOLO models:

- It is an efficient and powerful object detection model that allows anyone with a 1080 Ti or 2080 Ti GPU to train a very fast and accurate object detector.
- The influence of state-of-the-art “Bag-of-Freebies” and “Bag-of-Specials” object detection methods during detector training was verified.
- State-of-the-art methods, including CBN (Cross-iteration batch normalization) and PAN (Path aggregation network), were modified to be more efficient and better suited to single-GPU training.

Comparison of YOLOv4 with other models

In the experiments, YOLOv4 achieved an AP of 43.5% (65.7% AP50) on the Microsoft COCO dataset at a real-time speed of about 65 FPS on a Tesla V100, outperforming the fastest and most accurate detectors in terms of both speed and accuracy.
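The two accuracy numbers differ because they use different matching criteria. AP50 counts a detection as correct when its IoU with the ground truth is at least 0.5, while COCO's AP (AP50…95) averages AP over ten IoU thresholds from 0.50 to 0.95. The sketch below shows only this threshold bookkeeping; it assumes per-threshold AP values are computed elsewhere (for example by the official COCO evaluation tools), and the function names are hypothetical.

```python
def coco_iou_thresholds():
    """Ten IoU thresholds 0.50, 0.55, ..., 0.95 used for COCO-style AP."""
    return [round(0.50 + 0.05 * i, 2) for i in range(10)]


def coco_ap(ap_per_threshold):
    """COCO 'AP' (AP50...95): the mean of the AP values at each IoU threshold.

    ap_per_threshold maps each threshold to an AP value computed elsewhere.
    """
    thresholds = coco_iou_thresholds()
    return sum(ap_per_threshold[t] for t in thresholds) / len(thresholds)


def thresholds_passed(iou_value):
    """Thresholds at which a detection with this IoU would count as a match."""
    return [t for t in coco_iou_thresholds() if iou_value >= t]


# A detection overlapping its ground truth with IoU 0.72 matches under AP50,
# but only at the first five of the ten thresholds that make up AP.
print(len(thresholds_passed(0.72)), "of 10 thresholds")  # 5 of 10 thresholds
```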

Accuracy (AP) vs. speed (FPS) of YOLOv4 and other models

YOLOv4 runs twice as fast as EfficientDet with comparable performance, and compared with YOLOv3 it improves AP and FPS by 10% and 12%, respectively. YOLOv4's exceptional speed and accuracy, together with its thoroughly described paper, are excellent contributions to the research community.

What does YOLOv4 use?

- Bag of Freebies for backbone: CutMix and Mosaic data augmentation, DropBlock regularization, class label smoothing.
- Bag of Freebies for detector: CIoU-loss, CmBN, DropBlock regularization, Mosaic data augmentation, Self-Adversarial Training, Eliminate grid sensitivity, Using multiple anchors for a single ground truth, Cosine annealing scheduler, Optimal hyper-parameters, Random training shapes.
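CIoU-loss, the first detector freebie above, extends the IoU loss with a penalty on the normalized distance between box centers and a term for aspect-ratio consistency: L = 1 - IoU + ρ²/c² + αv, where ρ is the distance between the box centers, c is the diagonal of the smallest box enclosing both, v = (4/π²)(arctan(w_gt/h_gt) - arctan(w/h))² and α = v / ((1 - IoU) + v). The following is a minimal scalar sketch of that formula for boxes given as (cx, cy, w, h); real training code would compute it on batched tensors.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """Complete-IoU loss for two boxes given as (cx, cy, w, h)."""
    px, py, pw, ph = pred
    tx, ty, tw, th = target

    # Plain IoU, computed from corner coordinates.
    p1x, p1y, p2x, p2y = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    t1x, t1y, t2x, t2y = tx - tw / 2, ty - th / 2, tx + tw / 2, ty + th / 2
    inter = (max(0.0, min(p2x, t2x) - max(p1x, t1x))
             * max(0.0, min(p2y, t2y) - max(p1y, t1y)))
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Squared center distance over the squared diagonal of the enclosing box.
    rho2 = (px - tx) ** 2 + (py - ty) ** 2
    cw = max(p2x, t2x) - min(p1x, t1x)
    ch = max(p2y, t2y) - min(p1y, t1y)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(tw / th) - math.atan(pw / ph)) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v


print(round(ciou_loss((0, 0, 1, 1), (3, 0, 1, 1)), 3))  # 1.529
print(round(ciou_loss((0, 0, 1, 1), (5, 0, 1, 1)), 3))  # 1.676
```

Both example pairs have an IoU of 0, so a plain IoU loss would treat them identically; the distance term still tells the near miss from the far one.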

As the authors put it: “The influence of state-of-the-art ‘Bag-of-Freebies’ and ‘Bag-of-Specials’ object detection methods during detector training has been verified.”

- Bag of Specials for backbone: Mish activation, Cross-stage partial connections (CSP), Multi-input weighted residual connections (MiWRC).
- Bag of Specials for detector: Mish activation, SPP-block, SAM-block, PAN path-aggregation block, DIoU-NMS.
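Mish, used in both the backbone and the detector, is defined as mish(x) = x · tanh(softplus(x)) with softplus(x) = ln(1 + eˣ). A minimal scalar sketch follows; softplus is written in a numerically stable form so that large inputs do not overflow. Unlike ReLU, Mish is smooth and lets small negative values through.

```python
import math


def softplus(x):
    """Numerically stable ln(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"mish({x:+.1f}) = {mish(x):+.4f}")
```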


Conclusion

To sum up, YOLOv4 is a state-of-the-art detector that is faster (FPS) and more accurate (MS COCO AP50…95 and AP50) than other available detectors. It can be trained and deployed on a conventional GPU with 8–16 GB of VRAM, which makes broad use possible.

In addition, state-of-the-art methods, including CBN (Cross-iteration batch normalization) and PAN (Path aggregation network), were modified to make them more efficient and better suited to single-GPU training.
